Introduction

A conversational bot lets users interact with it in multiple forms, such as text, adaptive cards, and forms, from the Microsoft Teams client.

A bot in Microsoft Teams can be part of a one-to-one conversation, a group chat, or a channel in a team. Each scope has its own opportunities and challenges.

Conversational Bots

A conversation is a series of messages between one or more users and the bot.

There are three types of conversation in the Microsoft Teams client:

- Personal conversation (personal chat): a one-to-one chat between a user and the bot.

- Group conversation (group chat): a chat between the bot and two or more users.

- Teams channel: available to all members of the channel, and any team member can take part in the conversation.

Bots behave differently depending on the type of conversation, for example:

- A personal (one-to-one) conversation doesn't need an @mention. All messages the user sends are routed directly to the bot.

- In a Teams channel or group conversation, users must @mention the bot to invoke it.
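The difference between these scopes can be sketched in plain TypeScript. This is a minimal model, not botbuilder code. `IncomingMessage`, `requiresMention`, `stripMentions` and `describeMessage` are hypothetical names. The only real detail the sketch relies on is that Teams reports the scope in `conversation.conversationType` as `personal`, `groupChat` or `channel`. In a real bot, botbuilder's `TurnContext.removeRecipientMention` removes the bot's own mention from the text.

```typescript
// Teams reports the conversation scope in activity.conversation.conversationType.
type ConversationType = "personal" | "groupChat" | "channel";

// Hypothetical, minimal shape holding only the fields this sketch needs.
interface IncomingMessage {
  conversationType: ConversationType;
  text: string;
}

// Personal chat: every message reaches the bot.
// Group chat and channel: the bot only receives messages that @mention it.
function requiresMention(type: ConversationType): boolean {
  return type !== "personal";
}

// Drop <at>...</at> markup and surrounding whitespace so the rest can be matched as a command.
// Unlike TurnContext.removeRecipientMention, this removes every mention, not only the bot's.
function stripMentions(text: string): string {
  return text.replace(/<at>[^<]*<\/at>/g, "").trim();
}

// Summarize how a message arrived and which command text the bot would see.
function describeMessage(message: IncomingMessage): string {
  const command = stripMentions(message.text).toLowerCase();
  const how = requiresMention(message.conversationType) ? "via @mention" : "directly";
  return `${message.conversationType}: "${command}" received ${how}`;
}

console.log(describeMessage({ conversationType: "personal", text: "mentionme" }));
// personal: "mentionme" received directly
console.log(describeMessage({ conversationType: "channel", text: "<at>InteractiveBot</at> MentionMe" }));
// channel: "mentionme" received via @mention
```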

Prerequisites

To build a Microsoft Teams bot, you need the following in your environment:

- Microsoft Azure subscription

- Office 365 tenant

- Microsoft Teams enabled in the Office 365 tenant, with custom app upload allowed

- Node.js (v10.* or higher)

- npm (v6.* or higher)

- Gulp (v4.* or higher)

- Yeoman (v3.* or higher)

- Yeoman Generator for Microsoft Teams

- Visual Studio Code
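You can check installed versions against this list with a small helper. The helper compares a version string, such as the output of `node --version`, with the minimum major version listed above. This is a sketch only. `minimumMajor`, `majorOf` and `meetsMinimum` are hypothetical helpers, and they compare only the major version number.

```typescript
// Minimum major versions taken from the prerequisites list.
const minimumMajor: Record<string, number> = {
  node: 10,
  npm: 6,
  gulp: 4,
  yeoman: 3,
};

// Extract the major version from strings such as "v12.22.1" or "6.14.4".
function majorOf(version: string): number {
  const match = /^v?(\d+)/.exec(version.trim());
  if (!match) {
    throw new Error(`Unrecognized version string: ${version}`);
  }
  return Number(match[1]);
}

// True when the installed version meets the minimum major version recorded for the tool.
function meetsMinimum(tool: string, installed: string): boolean {
  const required = minimumMajor[tool];
  if (required === undefined) {
    throw new Error(`No minimum recorded for ${tool}`);
  }
  return majorOf(installed) >= required;
}

console.log(meetsMinimum("node", "v12.22.1")); // true
console.log(meetsMinimum("npm", "5.6.0")); // false
```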

Let's Start with the Azure Bot

Creating a Microsoft Teams bot involves two activities:

- Create a Microsoft Azure bot.

- Add the bot to a Microsoft Teams code base.

Step 1 - Create Microsoft Azure Bot

- Browse to https://portal.azure.com and sign in with your work or school account.

- Click "+ Create a resource".

- Select "AI + Machine Learning".

- Select "Web App Bot".

Step 2 - Complete the Required Information and Provision the Bot

- Bot handle: a unique name for your bot.

- Subscription: select a valid, available subscription.

- Resource Group: select an appropriate resource group, or create a new one as required.

- Location: select the Azure location closest to you or your preferred location.

- Pricing tier: select the required pricing tier, or F0 (free) for a proof of concept.

- App name: leave as-is for this demo.

- Service plan: leave as-is for this demo.

- Bot template: leave as-is for this demo.

- Application Insights: select Off for this demo.

- Microsoft App ID and password: leave as-is for this demo.

- Click Create to provision the bot.

Step 3 - Enable the Microsoft Teams Channel for Your Bot

For the bot to interact with Microsoft Teams, you must enable the Microsoft Teams channel.

When the process is complete, both Microsoft Teams and Web Chat should appear in your bot's channel list.

Step 4 - Create a Microsoft Teams App

In this section, we create a Node.js project.

- Open a command prompt and navigate to the directory where you want to create the project.

- Run the Yeoman Generator for Microsoft Teams:

```
yo teams
```

Step 5 - Update the Default Bot Code

In this step, we add a response for a one-to-one (personal) conversation.

Browse to the InteractiveChatBotBot file.

Step 6 - Add the One-to-One Chat Handler

To implement this functionality, locate and open the ./src/app/interactiveChatBotBot/InteractiveChatBotBot.ts file and make the following changes to it.

Add the following code after the existing import statements:

```typescript
import * as Util from "util";
import {
    StatePropertyAccessor,
    CardFactory,
    TurnContext,
    MemoryStorage,
    ConversationState,
    ActivityTypes,
    TeamsActivityHandler,
    MessageFactory,
} from "botbuilder";

const TextEncoder = Util.TextEncoder;
```

Locate the onMessage() handler within the constructor().

In the onMessage() handler, locate the line if (text.startsWith("hello")) { and replace it with the following code:

```typescript
if (text.startsWith("mentionme")) {
    await this.handleMessageMentionMeOneOnOne(context);
    return;
} else if (text.startsWith("hello")) {
```
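This replacement turns onMessage() into prefix-based dispatch: the first matching `startsWith` check wins. The sketch below models that routing in plain TypeScript, using the mentionme command from the manifest. `Handler`, `routes` and `route` are hypothetical names. The sketch assumes the bot's own @mention has already been removed from the text.

```typescript
// Hypothetical handler type: receives the normalized command text and returns a reply.
type Handler = (text: string) => string;

// Ordered prefix table; the first matching prefix wins, like an if / else if chain.
const routes: ReadonlyArray<readonly [string, Handler]> = [
  ["mentionme", () => "mentionme handler"],
  ["hello", () => "hello handler"],
];

// Assumes the bot's own @mention has already been removed from rawText.
function route(rawText: string): string {
  const text = rawText.trim().toLowerCase();
  for (const [prefix, handler] of routes) {
    if (text.startsWith(prefix)) {
      return handler(text);
    }
  }
  return "no handler";
}

console.log(route("  MentionMe ")); // mentionme handler
console.log(route("hello bot")); // hello handler
```

Because matching is by prefix, order matters. Put longer or more specific commands before shorter ones that could also match the same text.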

Add the following method to the class:

```typescript
private async handleMessageMentionMeOneOnOne(context: TurnContext): Promise<void> {
    // Mention entity that refers back to the user who sent the message
    const mention = {
        mentioned: context.activity.from,
        // The <at> text must also appear in the message text for Teams to render the mention
        text: `<at>${context.activity.from.name}</at>`,
        type: "mention"
    };
    const replyActivity = MessageFactory.text(`Hi ${mention.text} from a one to one personal chat.`);
    replyActivity.entities = [mention];
    await context.sendActivity(replyActivity);
}
```
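You can also inspect the shape of the reply without running a bot. The following sketch builds the same mention reply from plain objects. `ChannelAccount`, `MentionEntity`, `ReplyActivity` and `buildMentionReply` are hypothetical stand-ins, not the botbuilder types. The point the sketch illustrates is that the entity's `text` appears verbatim inside the message text.

```typescript
// Hypothetical stand-ins for the few Bot Framework fields the handler uses (not the botbuilder type definitions).
interface ChannelAccount {
  id: string;
  name: string;
}

interface MentionEntity {
  type: "mention";
  mentioned: ChannelAccount;
  text: string;
}

interface ReplyActivity {
  type: "message";
  text: string;
  entities: MentionEntity[];
}

// Build a reply that @mentions the sender; the entity text is embedded verbatim in the message text.
function buildMentionReply(from: ChannelAccount): ReplyActivity {
  const mention: MentionEntity = {
    type: "mention",
    mentioned: from,
    text: `<at>${from.name}</at>`,
  };
  return {
    type: "message",
    text: `Hi ${mention.text} from a one to one personal chat.`,
    entities: [mention],
  };
}

const reply = buildMentionReply({ id: "example-user-id", name: "Jane Doe" });
console.log(reply.text); // Hi <at>Jane Doe</at> from a one to one personal chat.
```

The sketch interpolates the display name directly. `TextEncoder.encode()` returns a `Uint8Array`, which a template literal renders as comma-separated byte values rather than as the name.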

Step 7 - Configure the Bot Credentials

Locate and open the ./.env file.

Find the following section in the file and set the two properties to the values you obtained when registering the bot (the Microsoft App ID and password):

```
# App Id and App Password for the Bot Framework bot
MICROSOFT_APP_ID=
MICROSOFT_APP_PASSWORD=
```
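The .env file is a list of KEY=VALUE lines. The following hypothetical `parseEnv` and `missingCredentials` helpers sketch a fail-fast check you could run before starting the bot: they report which of the two credentials are still empty. The parser is deliberately simplified and ignores quoting and escaping rules. A real project would normally load the file with a library such as dotenv.

```typescript
// Parse .env-style text: KEY=VALUE lines, ignoring blank lines and # comments (simplified).
function parseEnv(source: string): Record<string, string> {
  const values: Record<string, string> = {};
  for (const rawLine of source.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq === -1) continue; // skip lines without a key/value separator
    values[line.slice(0, eq).trim()] = line.slice(eq + 1).trim();
  }
  return values;
}

// Return the names of the bot credentials that are missing or left empty.
function missingCredentials(env: Record<string, string>): string[] {
  const required = ["MICROSOFT_APP_ID", "MICROSOFT_APP_PASSWORD"];
  return required.filter((key) => !env[key]);
}

const sample = [
  "# App Id and App Password for the Bot Framework bot",
  "MICROSOFT_APP_ID=00000000-0000-0000-0000-000000000000",
  "MICROSOFT_APP_PASSWORD=",
].join("\n");

console.log(missingCredentials(parseEnv(sample))); // [ 'MICROSOFT_APP_PASSWORD' ]
```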

Step 8 - Update the Manifest File

- Browse to the manifest file ./src/manifest/manifest.json.

- Update the following properties:

  - id: replace with the Azure bot ID

  - version: 1.0.0

  - packageName: InteractiveBotBot

  - bots: set botId to your bot's Microsoft App ID, for example:

```json
"bots": [
    {
        "botId": "b0edaf1f-0ded-4744-ba2c-113e50376be6",
        "needsChannelSelector": true,
        "isNotificationOnly": false,
        "scopes": [
            "team",
            "personal"
        ],
        "commandLists": [
            {
                "scopes": [
                    "team",
                    "personal"
                ],
                "commands": [
                    {
                        "title": "Help",
                        "description": "Shows help information"
                    },
                    {
                        "title": "mentionme",
                        "description": "Sends message with @mention of the sender"
                    }
                ]
            }
        ]
    }
],
```
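A quick consistency check before zipping the manifest can catch a mismatched bot ID. The sketch models only the parts of the `bots` entry shown above. The interfaces and `checkBotEntry` are hypothetical. The rule that every command-list scope should also be a bot scope is an assumption of this sketch, not a documented schema rule.

```typescript
// Subset of the Teams manifest "bots" entry used in this article (not the full schema).
interface BotCommand {
  title: string;
  description: string;
}

interface CommandList {
  scopes: string[];
  commands: BotCommand[];
}

interface BotEntry {
  botId: string;
  needsChannelSelector?: boolean;
  isNotificationOnly?: boolean;
  scopes: string[];
  commandLists?: CommandList[];
}

// Collect problems that would make the bots entry inconsistent with the bot registration.
function checkBotEntry(bot: BotEntry, microsoftAppId: string): string[] {
  const problems: string[] = [];
  if (bot.botId.toLowerCase() !== microsoftAppId.toLowerCase()) {
    problems.push("botId does not match MICROSOFT_APP_ID");
  }
  // Assumption of this sketch: command lists should only use scopes the bot itself declares.
  for (const list of bot.commandLists ?? []) {
    for (const scope of list.scopes) {
      if (bot.scopes.indexOf(scope) === -1) {
        problems.push(`command list scope "${scope}" is not a bot scope`);
      }
    }
  }
  return problems;
}

const articleBot: BotEntry = {
  botId: "b0edaf1f-0ded-4744-ba2c-113e50376be6",
  needsChannelSelector: true,
  isNotificationOnly: false,
  scopes: ["team", "personal"],
  commandLists: [
    {
      scopes: ["team", "personal"],
      commands: [
        { title: "Help", description: "Shows help information" },
        { title: "mentionme", description: "Sends message with @mention of the sender" },
      ],
    },
  ],
};

console.log(checkBotEntry(articleBot, "b0edaf1f-0ded-4744-ba2c-113e50376be6").length); // 0
```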

Step 9 - Test and Run the Bot

From VS Code, select View > Terminal and run the following command:

```
gulp ngrok-serve
```

Microsoft Teams requires all content to be served over HTTPS, but local debugging uses a local HTTP web server. Ngrok creates a secure, publicly routable HTTPS URL that tunnels to the local HTTP web server, so you can debug the app over HTTPS.

In this example, ngrok created the temporary URL "14dceed6815b.ngrok.io". Set this URL as the Azure bot's messaging endpoint. For a bot generated by yo teams, the endpoint typically ends in /api/messages, for example https://14dceed6815b.ngrok.io/api/messages. To update the endpoint:

- Select Settings under Bot management.

- Update Messaging endpoint with the ngrok URL.

- Click Save.
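Because the ngrok URL is temporary, it can help to build the messaging endpoint programmatically. This sketch assumes the bot listens on `/api/messages`. `messagingEndpoint` is a hypothetical helper that normalizes the host printed by ngrok and always produces an HTTPS URL.

```typescript
// Build the Azure bot messaging endpoint from the host name printed by ngrok.
// ASSUMPTION: the bot listens on /api/messages; pass another path if your project differs.
function messagingEndpoint(ngrokHost: string, endpointPath = "/api/messages"): string {
  const host = ngrokHost
    .trim()
    .replace(/^https?:\/\//, "") // drop any scheme; the endpoint must be HTTPS
    .replace(/\/+$/, ""); // drop trailing slashes
  const path = endpointPath.startsWith("/") ? endpointPath : `/${endpointPath}`;
  return `https://${host}${path}`;
}

console.log(messagingEndpoint("14dceed6815b.ngrok.io"));
// https://14dceed6815b.ngrok.io/api/messages
```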

Step 9 - Upload Manifest File

Navigate to manifest folder /src/manifest. Select both

.png files and manifest.jon file and zip all three files.

Give the name of zip file "manifest"

Login to https://teams.microsoft.com

- Navigate to App Studio

- Select Import an existing app

- Select the manifest.zip file

- Bot name with the icon will appear at the screen

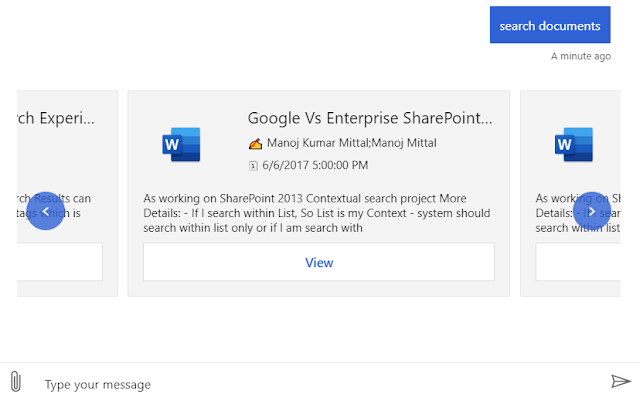

Step 10 - Install Custom App to Teams

Solution

Using the app bar navigation menu, select the Mode

added apps button. Then select Browse all apps

followed by Upload for me or my teams.

In the file dialog that appears, select the Microsoft

Teams package in your project. This app package is a ZIP

file that can be found in the project's ./package

folder.

Click Add or Select app to navigate to chat with the

bot

Select the MentionMe command, or manually type

mentionme in the compose box, then press enter.

After a few seconds, you should see the bot respond

mentioning the user you are signed in with,

GitHub repository: https://github.com/manoj1201/MSTeamDevelopment/tree/master/ConversationalBot-MSTeams


I hope you enjoyed this article and learned something new. Thanks for reading, and stay tuned for the next article.